Please note. The information in this section represents a specification of intent and work in progress. Actual implementations using the language have partial implementation of the grammars and syntaxes described here.

Purpose, background and rationale

Question: Yet another language? Surely not.

Answer: No, or at least, not quite.

The following sections first describe the purpose of a modelling language, the background to the Discovery approach and the rationale behind the approach adopted.

Subsequently the sections break down the various aspects of the language at ever increasing granularity, emphasising the relationship between the language fragments and the languages from which they are derived, resulting in the definition of the grammar of the language. The relationship between the language and a model store is also described.

Purpose of a language

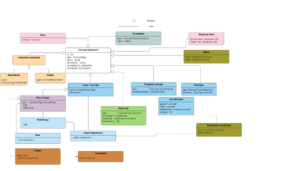

The main purpose of a modelling language is to exchange data and information about information models in a way that both machines and humans can understand. A language must be able to describe the types of structures that make up an information model as can be seen on the right.

It is necessary to support both human and machine readability so that a model can be validated both by humans and computers. Humans can read diagrams or text. Machines can read data bits. The two forms can be brought together as a stream of characters.

A purely human based language would be ambiguous, as all human languages are. A language that is both can be used to promote a shared understanding of often complex structures whilst enabling machines to process data in a consistent way.

It is almost always the case that a very precise machine readable language is hard for humans to follow and that a human understandable language is hard to compute consistently. As a compromise, many languages are presented in a variety of grammars and syntaxes, each targeted at different readers. The languages in this article all adopt a multi-grammar approach in line with this dual purpose.

Background

Information modelling covers three main business purposes: Inference, validation and enquiry. Health information modelling is no different.

Inference is pivotal to decision making. For example, if you are about to prescribe a drug containing methicillin to a patient, and the patient has previously stated that they are allergic to penicillin, it is reasonable to infer that if they take the drug, an allergic reaction might ensue, and thus another drug is prescribed.

Furthermore, the ability to classify concepts enables business decision making at individual and population level. Thus a modelling language must include the ability to infer things and produce classifications.
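
As a minimal sketch of the kind of inference described above, the following Python fragment walks a small, purely illustrative subclass hierarchy to decide whether a drug ingredient falls under a substance the patient is allergic to. The concept names and the `SUBCLASS_OF` table are assumptions made for this example and are not taken from any real ontology.

```python
# Hypothetical subclass axioms: concept -> its direct superclasses
SUBCLASS_OF = {
    "Methicillin": {"Penicillin"},
    "Penicillin": {"BetaLactamAntibiotic"},
    "BetaLactamAntibiotic": {"Antibiotic"},
}


def ancestors(concept, axioms=SUBCLASS_OF):
    """Return every class that subsumes `concept` (transitive closure)."""
    seen = set()
    stack = [concept]
    while stack:
        current = stack.pop()
        for parent in axioms.get(current, ()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def may_trigger_allergy(ingredient, allergies):
    """True if the ingredient is, or is a kind of, a substance the patient is allergic to."""
    kinds = ancestors(ingredient) | {ingredient}
    return bool(kinds & set(allergies))


print(may_trigger_allergy("Methicillin", ["Penicillin"]))  # True
```

The same closure can be used for classification: any concept whose ancestors include a given class belongs to that class.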

Data Validation is essential for consistent business operations. Data models, user input forms, and data set specifications are designed to enable data collections to be validated. Maintaining a standard for data collection is essential. For example, if a date of birth was not recorded in a patient record, the age of the patient could not be determined, and that would massively affect the probability of a disease or the outcome of a treatment. Also, if more than one date of birth was recorded for the same patient, it would be nearly as useless. Thus a modelling language must include the ability to constrain data models to suit particular business needs.

Enquiry (or query) is necessary to generate information from data. There is little point in recording data unless it can be interrogated and the results of the interrogation acted upon. Thus a modelling language must include the ability to query the data as defined or described, including the use of inference rules to find data that was recorded in one context for use in another.

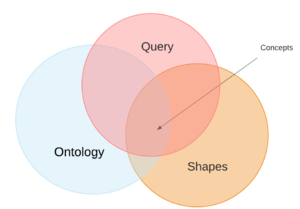

In order to meet these requirements a modelling language will contain the 3 aspects:

- To provide inference, a modelling language must support an ontology. The ontology defines the rules used in inference.

- To enable validation, a modelling language must be able to define structures with specific properties. Properties defined in this way are constraints on what would otherwise be infinite possibilities. These constraints result in schemas or business classes, which are used to hold actual data in objects. These constraints are referred to in this article as shapes.

- To enable query, a modelling language must include a query language of sufficient sophistication to retrieve the necessary information from the information model as well as real data that is modelled by the model.

There is overlap between these 3 aspects. Ideally, a language would cover these three aspects in an integrated manner and that is the case with the Health information Discovery modelling language.

Contributory languages

An information model can be modelled as a Graph i.e. a set of nodes and edges (nodes and relationships, nodes and properties). Likewise, health data can be modelled as a graph conforming to the information model graph.

The world standard approach to a language that models graphs is RDF, which considers a graph to be a series of interconnected triples, each triple consisting of a subject, a predicate and an object. Thus the Discovery modelling language uses RDF as its fundamental basis, and can therefore be presented in the RDF grammars. The common grammars used in this article include Turtle (Terse RDF Triple Language) and the more machine friendly JSON-LD (JSON for Linked Data), which enables simple JSON identifiers to be contextualised so that one set of terms can map directly to internationally defined terms or IRIs.

RDF in itself holds no semantics whatsoever, i.e. no one can infer or validate or query based purely on an RDF structure. To use it, it is necessary to provide semantic definitions for certain predicates and adopt certain conventions to imply usage of the constructs. In providing those semantic definitions, the predicates themselves can then be used to semantically define many other things.

The three aspects alluded to above are covered by the logical inclusion of fragments, or profiles of a set of W3C semantic based languages, which are:

- OWL2, which is used for semantic definition and inference. In line with convention, only OWL2 EL is used and thus existential quantification and object intersection can be assumed in its treatment of class expressions and axioms. The open world assumption inherent in OWL means it is very powerful for subsumption testing but cannot be used for constraints without abuse of the grammar.

- SHACL, which is used for data modelling constraint definitions. SHACL can also include OWL constructs but its main emphasis is on cardinality and value constraints. It is an ideal approach for defining logical schemas, and because SHACL uses IRIs and shares conventions with other W3C recommended languages it can be integrated with the other two aspects. Furthermore, as some validation rules require quite advanced processing SHACL can also include query fragments.

- SPARQL and GraphQL, which are both used for query. GraphQL, when presented in JSON-LD, is a pragmatic approach to extracting graph results via APIs, and its type and directive systems enable properties to operate as functions or methods. SPARQL is a more standard W3C query language for graphs but can suffer from its own inbuilt flexibility, making it hard to produce consistent results. SPARQL is included to the extent that it can be easily interpreted into SQL or other query languages. SPARQL with entailment regimes is in effect SPARQL query with OWL support.

- RDF itself. RDF triples can be used to hold objects themselves, and an information model will hold many objects which are instances of the classes as defined above (e.g. value sets and other instances).

The information modelling services used by Discovery can interoperate using the above sub-languages, but Discovery also includes a language superset making it easy to integrate. For example it is easy to mix OWL axioms with data model shape constraints as well as value sets without forcing a misinterpretation of axioms.

Language and the information model APIs

The language (or languages) are a means to an end i.e. a human and machine readable means of exchanging information models and use of the language to interact with implementations of health records.

An information model is an abstract representation of data, but an information model must have content and that content must be stored.

Data cannot be stored conceptually, only physically, and thus there must be a relationship between the abstract model and a physical store.

In the information model services, the abstract model is instantiated as a set of objects of classes, the data elements of those classes holding the subject, predicate and object structures. In reality those objects, together with translation and data access methods, are instantiated in some programming language, e.g. Java.

The physical store is currently held in a triple like relational database accessed by a relational database engine but could be easily stored as a native graph.
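
To illustrate what a triple like relational store might look like, here is a minimal sketch using Python's built-in `sqlite3` module. The table name `triple`, its three columns and the sample rows are assumptions chosen for this example; they do not describe the actual Discovery schema.

```python
import sqlite3

# In-memory database standing in for the physical store (illustrative only)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE triple (subject TEXT, predicate TEXT, object TEXT)")
conn.executemany(
    "INSERT INTO triple (subject, predicate, object) VALUES (?, ?, ?)",
    [
        ("Grandfather", "subClassOf", "Person"),
        ("Grandfather", "hasGender", "Male"),
        ("Person", "subClassOf", "Animal"),
    ],
)


def objects_of(subject, predicate):
    """Return the objects of all triples matching a subject and predicate."""
    rows = conn.execute(
        "SELECT object FROM triple WHERE subject = ? AND predicate = ? ORDER BY object",
        (subject, predicate),
    )
    return [row[0] for row in rows]


print(objects_of("Grandfather", "subClassOf"))  # ['Person']
```

Because every statement is a row of subject, predicate and object, the same data could be loaded into a native graph store without changing its meaning.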

The model can then be used as the source and target of the exchange of data, the exchange using a language that interoperates via a set of APIs.

This can be visualised as in the diagram on the right. It can be seen that the inner physical store, is accessed by an object model layer, which is itself accessed by APIs using modelling language grammar and syntax. The diagram shows the main grammars supported by the Discovery information model, including the Discovery information modelling language grammar itself.

Support for the main languages means that a Discovery information model instance has 2 levels of separation of concerns from the languages used to exchange data, and the underlying model store. There is thus no reason to buy into Discovery language to use the information model.

Likewise, an implementation of objects that hold data in a form that is compatible with a particular data model and ontology module, can be accessed using the same language.

This makes the language just as useful for exchanging query definitions and value sets as it is for actual query of health record stores via interpreters.

The remainder of this article describes the language itself, starting with some high level sections on the components, and eventually providing a specification of the language and links to technical implementations, all of which are open source.

The language building blocks

This section describes the approach to the design of the grammars of the language, including how the sublanguages are incorporated into a single whole without loss of meaning.

The description is divided into paragraphs and subsections which follow a set of decisions from the basic fundamentals to the final grammar specification held in a separate article.

Data as a Graph

Data is conceptualised as a graph and thus the model of the data is a graph. Consequently the language used must support graphs.

A graph is considered as a set of nodes and edges. All nodes must have at least one edge and each edge must be connected to at least two nodes. Thus the smallest graph must have at least 3 entities.
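
The following short sketch shows the smallest such graph in Python: a single triple yields two nodes and one edge, i.e. three entities. The names are placeholders chosen for illustration.

```python
def nodes_and_edges(triples):
    """Split (subject, predicate, object) triples into a node set and an edge list."""
    nodes = set()
    edges = []
    for subject, predicate, obj in triples:
        nodes.update((subject, obj))
        edges.append((subject, predicate, obj))
    return nodes, edges


nodes, edges = nodes_and_edges([("Subject1", "Predicate1", "Object1")])
print(len(nodes) + len(edges))  # 3
```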

Human and machine readable

The model must be both human and machine readable. The decision is to represent a graph using the recognisable plain language characters in UTF-8. For human readability the characters read from left to right and for machine readability a graph is a character stream from beginning to end.

Optimised human legibility and optimised machine readability

These two are impossible to reconcile in a single grammar. Consequently two grammars are developed, one for human legibility and the other for optimised machine processing. However, both must be human readable.

Semantic translatability

A model presented in the human optimised grammar must be translatable directly to the machine representation without loss of semantics. In the ensuing paragraphs the human optimised grammar is illustrated, but in the final language specification both are presented side by side.

Human oriented grammar

A natural language based grammatical approach is taken, initially following English grammar, namely the modelling of data via sentences consisting of subject, predicate and object in that order. Legibility and machine parsing are assisted by punctuation.

A terse approach is taken to grammar i.e. avoiding ambiguous flowery language. Thus RDF triples form the basis of the model.

Semantic triples

Predicates form the basis of semantic interpretation and predicates are used as atomic entities that have identifiers. Predicate identifiers are recognisably related to their meaning but are given further definition via prose for background. Subjects and objects may have identifiers which may or may not be meaningful. As subjects and objects operate as nodes, and nodes require edges, predicates are used as the edges in a graph.

To make sense of the language, subjects require constructs that include predicate object lists, object lists, and objects which are themselves defined by predicates. Put together with the terse language requirement, the grammar used in the human language is Turtle. The following snippet illustrates the main structures together with punctuation.

    Subject1
        Predicate1 Object1;
        Predicate2 Object2;    # predicate object list separated by ';'
        Predicate3 (Object3,
                    Object4,
                    Object5);  # object list enclosed by '()'
        Predicate4 [           # unidentified object enclosed by '[]'
            Predicate5 Object6;
            Predicate6 Object7
        ]
        .                      # end of sentence

    Subject2 Predicate1 Object1.   # simple triple
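
The structures in the snippet can be pictured as a nested data structure that flattens into simple triples. The sketch below is one possible reading, written in Python: it assumes that an object list yields one triple per object and that an unidentified object receives a generated blank node label such as `_:b1`. Both choices are assumptions for illustration, since the real interpretation of a list depends on the preceding predicate.

```python
from itertools import count

_blank_ids = count(1)  # generator of labels for unidentified objects


def flatten(subject, predicate_objects):
    """Yield (subject, predicate, object) triples from a nested structure."""
    for predicate, obj in predicate_objects:
        if isinstance(obj, list):       # object list: one triple per object
            for item in obj:
                yield (subject, predicate, item)
        elif isinstance(obj, dict):     # unidentified (anonymous) object
            blank = f"_:b{next(_blank_ids)}"
            yield (subject, predicate, blank)
            yield from flatten(blank, obj.items())
        else:                           # plain identified object
            yield (subject, predicate, obj)


sentence = [
    ("Predicate1", "Object1"),
    ("Predicate2", "Object2"),
    ("Predicate3", ["Object3", "Object4", "Object5"]),
    ("Predicate4", {"Predicate5": "Object6", "Predicate6": "Object7"}),
]
triples = list(flatten("Subject1", sentence))
print(len(triples))  # 8
```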

Identifiers

Nodes and edges (subjects, predicates and objects) may be identified and the identifiers used elsewhere.

Identifiers are universal and presented in one of the following forms:

- Full IRI (Internationalized Resource Identifier), which is the fully resolved identifier enclosed by <>

- Abbreviated IRI, i.e. a prefix which is resolved to an IRI, followed by the local name which, when appended to the resolved prefix, becomes a full IRI

- Alias. Used by applications that have a close affinity to a particular information model instance, the aliases being mapped to the full IRIs

UUIDs are not used within the model but of course may be used in instances of data.
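
A minimal sketch of how the three identifier forms might be resolved to the same full IRI is given below. The `PREFIXES` and `ALIASES` tables and the `http://example.org/im#` namespace are hypothetical and used only to make the example runnable.

```python
# Hypothetical prefix and alias tables for illustration
PREFIXES = {"ex": "http://example.org/im#"}
ALIASES = {"Grandfather": "http://example.org/im#Grandfather"}


def resolve(identifier):
    """Resolve a full IRI, an abbreviated IRI or an alias to a full IRI string."""
    # Full IRI: enclosed by <>
    if identifier.startswith("<") and identifier.endswith(">"):
        return identifier[1:-1]
    # Abbreviated IRI: known prefix, colon, local name
    prefix, sep, local = identifier.partition(":")
    if sep and prefix in PREFIXES:
        return PREFIXES[prefix] + local
    # Alias: mapped directly to a full IRI
    if identifier in ALIASES:
        return ALIASES[identifier]
    raise KeyError(f"Cannot resolve identifier: {identifier}")


for form in ("<http://example.org/im#Grandfather>", "ex:Grandfather", "Grandfather"):
    print(resolve(form))
```

All three calls print the same IRI, which is the property that makes the forms interchangeable.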

Semantic context

The interpretation of a structure is often dependent on the preceding predicate. Because the language is semantically constrained to the profiles of the sublanguages, certain punctuations can be semantically interpreted in context. For example, as the language incorporates OWL EL but not OWL DL, Object Intersection (and) is supported but not Object union.

This allows for the use of a collection construct, as in the following two equivalent definitions of a grandfather. In the first, Grandfather is equivalent to the intersection of a person and an anonymous class that is male and has children, i.e. '(x, [y; z])'. In the second, it is the intersection of a person, something that is male, and something that has children, i.e. '(x, y, z)'. Both interpretations assume AND as the operator because OR is not supported at this point in OWL EL.

    Grandfather
        isEquivalentTo (
            Person,                  # ObjectIntersection
            [                        # anonymous class
                hasGender Male;
                hasChild (Person,    # ObjectIntersection
                          hasChild Person)
            ]
        ).

or

    Grandfather
        isEquivalentTo (
            Person,                  # ObjectIntersection
            hasGender Male,          # anonymous class
            hasChild (
                Person,              # ObjectIntersection
                hasChild Person)
        ).

Machine oriented grammar

JSON is a popular syntax currently and thus this is used as an alternative.

JSON represents subjects, predicates and objects as object names and values, with values being either literals or objects.

JSON itself has no inherent mechanism for differentiating between different types of entities and therefore JSON-LD is used. In JSON-LD, identifiers resolve initially to @id, and the use of @context enables prefixed IRIs and aliases.

The above Grandfather can be represented in JSON as follows:

    {"@id" : "Grandfather",
     "EquivalentTo" : [
        {"@id" : "Person"},
        {"hasGender" : {"@id" : "Male"}},
        {"hasChild" : [
            {"@id" : "Person"},
            {"hasChild" : {"@id" : "Person"}}]}]}

This is equivalent to the following version 2 syntax:

    {"iri" : "Grandfather",
     "EquivalentTo" : [
        {"Intersection" : [
            {"Class" : {"iri" : "Person"}},
            {"ObjectPropertyValue" : {
                "Property" : {"iri" : "hasGender"},
                "ValueType" : {"iri" : "Male"}
            }},
            {"ObjectPropertyValue" : {
                "Property" : {"iri" : "hasChild"},
                "Expression" : {"Intersection" : [
                    {"Class" : {"iri" : "Person"}},
                    {"ObjectPropertyValue" : {
                        "Property" : {"iri" : "hasChild"},
                        "ValueType" : {"iri" : "Person"}}}]}}}]}]}

High level aspects of the language

Concepts

Common to all of the language is the modelling abstraction "concept", which is an idea that can be defined, or at least described. All classes, and properties and data types in a model are represented as concrete classes which are subtypes of a concept. In line with semantic web standards a concept is represented in two forms:

Concepts are used as subjects, predicates and objects, and both atomic and complex concepts (the latter called class expressions) are made up of other concepts.

The language vocabulary also includes specialised types of predicates, effectively used as reserved words. For example, the ontology uses a type of predicate known as an Axiom which makes statements about the concept. For example the axiom "is a subclass of" is used to state that class A is entailed by class B. A data model may use a specialised predicate "target class" to state the class which the shape is describing and constraining, for a particular business purpose. The content of these vocabularies is dictated by the grammar specification but the properties and their purpose are derived directly from the sublanguages.

Context

Data is considered to be linked across the world, which means that IRIs are the main identifiers. However, IRIs can be unwieldy to use and some of the languages, such as GraphQL, do not use them. Furthermore, when used in JSON (the main exchange syntax via APIs), they can cause significant bloat. Also, identifiers such as codes or terms have often been created for local use in local single systems and in isolation are ambiguous.

To create linked data from local identifiers or vocabulary, the concept of Context is applied. The main forms of context in use are:

- PREFIX declaration for IRIs, which enables the use of abbreviated IRIs. This approach is used in OWL, RDF Turtle, SHACL and Discovery itself.

- VOCABULARY CONTEXT declaration for both IRIs and other tokens. This approach is used in JSON-LD, which converts local JSON properties and objects into linked data identifiers via the @context keyword.

- MAPPING CONTEXT definitions for system level vocabularies. This provides sufficient context to uniquely identify a local code or term by including details such as the health care provider, the system and the table within a system. In essence a specialised class with the various property values making up the context.
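
As a rough sketch of the mapping context idea, the Python fragment below treats the context as a small class whose property values (provider, system and table) disambiguate a local code. The provider names, codes and IRIs are all invented for illustration.

```python
from typing import NamedTuple, Optional


class MappingContext(NamedTuple):
    """Hypothetical context: where a local code or term was recorded."""
    provider: str
    system: str
    table: str


# The same local code "PEN" means different things in different contexts
CODE_MAP = {
    (MappingContext("ExampleHospital", "ExampleEPR", "allergy"), "PEN"):
        "http://example.org/im#PenicillinAllergy",
    (MappingContext("ExampleLab", "ExampleLIMS", "result_status"), "PEN"):
        "http://example.org/im#PendingResult",
}


def to_iri(context: MappingContext, local_code: str) -> Optional[str]:
    """Return the concept IRI for a local code in a given context, if mapped."""
    return CODE_MAP.get((context, local_code))


hospital = MappingContext("ExampleHospital", "ExampleEPR", "allergy")
print(to_iri(hospital, "PEN"))  # http://example.org/im#PenicillinAllergy
```

Without the context the code "PEN" is ambiguous; with it, each occurrence resolves to exactly one concept or to none.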

Sub languages

The Discovery language, as a mixed language, has its own grammars as below, but in addition the language sub components can be used in their respective grammars and syntaxes. This enables multiple levels of interoperability, including between specialised community based languages and more general languages.

For example, the Snomed-CT community has a specialised language, "Expression constraint language" (ECL), which can also be directly mapped to OWL2 and Discovery, and thus Discovery language maps to the 4-6 main OWL syntaxes as well as ECL. Each language has its own nuances, usually designed to simplify representations of complex structures. For example, in ECL, the reserved word MINUS (used to exclude certain subclasses from a superclass) maps to the much more obscure OWL2 syntax that requires the modelling of class IRIs "punned" as individual IRIs in order to properly exclude instances when generating lists of concepts.

This sort of arcane mapping is avoided by use of entailment and query syntax. ECL is an example of a grammar that implies inference in an open world but also constrains relationships in a closed world view.
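
When treated as a query over an already classified hierarchy, the effect of MINUS can be sketched as a simple set difference. The Python example below assumes a tiny illustrative hierarchy in `CHILDREN` and mimics an ECL expression of the form `<< Antibiotic MINUS << Penicillin`; the concept names are placeholders, not Snomed-CT content.

```python
# Hypothetical classified hierarchy: concept -> direct subclasses
CHILDREN = {
    "Antibiotic": {"Penicillin", "Macrolide"},
    "Penicillin": {"Methicillin", "Amoxicillin"},
    "Macrolide": {"Erythromycin"},
}


def descendants_or_self(concept):
    """Return the concept together with all of its subclasses."""
    result = {concept}
    for child in CHILDREN.get(concept, ()):
        result |= descendants_or_self(child)
    return result


def minus(include, exclude):
    """Concepts under `include`, excluding everything under `exclude`."""
    return descendants_or_self(include) - descendants_or_self(exclude)


print(sorted(minus("Antibiotic", "Penicillin")))
# ['Antibiotic', 'Erythromycin', 'Macrolide']
```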

Discovery language has its own grammars, which include:

- A terse abbreviated language, similar to Turtle

- A proprietary JSON based grammar, which maps directly to the internal class structures used in Discovery and is used by client applications that have a strong contract with a server.

- An open standard JSON-LD representation used by systems that prefer JSON and are able to resolve identifiers via the JSON-LD context structure.

Because the information models are accessible via APIs, this means that systems can use any of the above, or exchange information in the specialised standard sublanguages which are:

- Expression constraint language (ECL) with its single string syntax

- OWL2 EL presented as functional syntax, RDF/XML, Manchester, JSON-LD

- SHACL presented as JSON-LD

- GraphQL presented as JSON-LD (GraphQL-LD) or GraphQL natively

Semantic Ontology

Supporting article: Discovery semantic ontology language

The semantic ontology subsumes OWL2 EL.

OWL2, like Snomed-CT, forms the logical basis for semantic definition and axioms for inferencing. OWL2 subsets of Discovery are available in the Discovery syntaxes or the OWL2 syntaxes.

In its usual use, OWL2 EL is used for reasoning and classification via the use of the Open world assumption. In effect this means that OWL2 can be used to infer X from Y which forms the basis of most subsumption or entailment queries in healthcare.

Note. In theory, OWL2 DL can also be used to model property domains and ranges so that they may be used as editorial policies. Where classic OWL2 DL normally models domains of a property in order to infer the class of a certain entity, one can use the same grammar for use in editorial policies, i.e. only certain properties are allowed for certain classes. However, this represents a misuse of the OWL grammar, i.e. use of the syntax to mean something else. Therefore SHACL is used for editorial policies.

For example, where OWL2 may say that one of the domains of a causative agent is an allergy (i.e. an unknown class with a property of causative agent is likely to be an allergy), in the modelling the editorial policy states that an allergy can only have properties that are allowed via the property domain. Thus the Snomed MRCM could be modelled in OWL2 DL. However, the SHACL constructs targetObjectsOf and targetSubjectsOf are used as constraints instead.

Thus only existential quantification and object intersections are used for reasoning. Cardinality is likewise not required.

The ontology in theory supports the OWL2 syntaxes such as the Functional syntax and Manchester syntax, but can be represented by JSON-LD or the Discovery JSON based syntax, as part of the full information modelling language. Of particular value is the "inverse property of" axiom, as this can then be used when examining data model properties.

Together with the query language, OWL2 makes the language compatible also with Expression constraint language which is used as the standard for specifying Snomed-CT expression query.

The ontologies that are modelled are considered as modular ontologies. It is not expected that one "mega ontology" would be authored but that there would be maximum sharing of concept definitions (known as axioms), which results in a super ontology of modular ontologies.

Data modelling as shapes

Data models model classes and properties according to business purposes. This is a different approach to the open world assumption of semantic ontologies.

To illustrate the difference, take the modelling of a human being or person.

From a semantic perspective a person could be said to be equivalent to an animal with a certain set of DNA (nuclear or mitochondrial), perhaps including the means of growth, or perhaps being defined at some point before, at the start of, or sometime after the embryonic phase. One would normally just state that a person is an instance of Homo sapiens and that Homo sapiens is a species of.... etc.

From a data model perspective we may wish to model a record of a person. We could say that a certain shape is "a record of" a person. In SHACL this is referred to as "targetClass". The shape will have one date of birth, one current gender, and perhaps a main residence. Cardinality is expected.

SHACL is used inherently, although consideration is given to its community cousin ShEx.

The difference is between the open and closed world: the model of the person is a constraint on the possible (unlimited) properties of a person.
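
The closed world, cardinality based view of a person record can be sketched as follows. This is not SHACL itself but a simplified Python stand-in: the `PERSON_RECORD_SHAPE` dictionary borrows the SHACL ideas of a target class and minimum and maximum counts, and the property names are assumptions made for the example.

```python
# Simplified stand-in for a shape: target class plus cardinality per property
PERSON_RECORD_SHAPE = {
    "targetClass": "Person",
    "properties": {
        "dateOfBirth": {"minCount": 1, "maxCount": 1},
        "currentGender": {"minCount": 1, "maxCount": 1},
        "mainResidence": {"minCount": 0, "maxCount": 1},
    },
}


def validate(record, shape):
    """Return a list of violation messages; an empty list means the record conforms."""
    violations = []
    for name, rule in shape["properties"].items():
        found = len(record.get(name, []))
        if found < rule["minCount"]:
            violations.append(f"{name}: expected at least {rule['minCount']}, found {found}")
        if found > rule["maxCount"]:
            violations.append(f"{name}: expected at most {rule['maxCount']}, found {found}")
    return violations


record = {"dateOfBirth": ["1950-01-01", "1951-01-01"], "currentGender": ["Male"]}
print(validate(record, PERSON_RECORD_SHAPE))
# ['dateOfBirth: expected at most 1, found 2']
```

An ontology would not object to a person with two dates of birth or none; the shape does, because it encodes what a particular business purpose requires of the record.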

A particular data model is a particular business oriented perspective on a set of concepts. As there are potentially thousands of different perspectives (e.g. a GP versus a geneticist), there is a potentially unlimited number of data models. All the data models modelled in Discovery share the same atomic concepts and the same semantic ontological definitions across ontologies where possible, but where not, mapping relationships are used.

The binding of a data model to its property values is based on a business specific model. For example a standard FHIR resource will map directly to the equivalent data model class, property and value set, whose meaning is defined in the semantic ontology, but the same data may be carried in a non FHIR resource without loss of interoperability.

A common approach to modelling and use of a standard approach to ontology, together with modularisation, means that any sending or receiving machine which uses concepts from the semantic ontology can adopt full semantic interoperability. If both machines use the same data model for the same business, the data may be presented in the same relationship, but if the two machines use different data models for different businesses they may present the data in different ways, but without any loss of meaning or query capability.

The integration between data model shapes and ontological concepts makes the information model very powerful and is the single most important contributor to semantic interoperability.

Data mapping

This part of the language is used to define mappings between the data model and an actual schema to enable queries and filters to automatically cope with the ever extending ontology and data properties.

This is part of the semantic ontology but uses the idea of context (described later on).

Query

It is fair to say that data modelling and semantic ontology are useless without the means of query.

The current approach to the specification of query uses the GraphQL approach with type extensions and directive extensions.

GraphQL (despite its name) is not in itself a query language but a way of representing the graph like structure of an underlying model that has been built using OWL. GraphQL has a very simple class property representation, is ideal for REST APIs, and its results are JSON objects in line with the approach taken by the above Discovery syntax.

Nevertheless, GraphQL considers properties to be functions (high order logic) and therefore properties can accept parameters. For example, a patient's average systolic blood pressure reading could be considered a property with a single parameter being a list of the last 3 blood pressure readings. Parameters are types and types can be created and extended.
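
The idea of a property that behaves like a function can be sketched in plain Python, without any GraphQL library. In this hypothetical example the `Patient` class, the method name `average_systolic` and its `last` parameter are all assumptions; the parameter here is the number of most recent readings to include, a simplification of the list parameter described above.

```python
from statistics import mean


class Patient:
    """Hypothetical patient type holding systolic readings, oldest first."""

    def __init__(self, systolic_readings):
        self._systolic = list(systolic_readings)

    def average_systolic(self, last=3):
        """A 'property' that accepts a parameter: average of the last N readings."""
        recent = self._systolic[-last:] if last > 0 else []
        return mean(recent) if recent else None


patient = Patient([150, 140, 130, 120])
print(patient.average_systolic(last=3))  # 130
```

A filter, sort order or limit could be passed in the same way, as further typed parameters on the property.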

In addition, GraphQL supports the idea of extensions of directives which further extend the grammar.

Thus GraphQL capability is extended by enabling property parameters as types to support such things as filtering, sorting and limiting, in the same way as any other query language, by modelling types passed as parameters. Subqueries are then supported in the same way.

GraphQL itself is used when the enquirer is familiar with the local logical schema, i.e. understands the available types and fields. In order to support semantic web concepts an extension to GraphQL, GraphQL-LD, is used, which is essentially GraphQL with JSON-LD context.

GraphQL-LD has been chosen over SPARQL for reasons of simplicity, and many now consider GraphQL to be a de facto standard. However, this is an ongoing consideration.

ABAC language

Main article: ABAC Language

The Discovery attribute based access control language is presented as a pragmatic JSON based profile of the XACML language, modified to use the information model query language (SPARQL) to define policy rules. ABAC attributes are defined in the semantic ontology in the same way as all other classes and properties.

The language is used to support some of the data access authorisation processes as described in the specification - Identity, authentication and authorisation.

This article specifies the scope of the language, the grammar and the syntax, together with examples. Whilst presented as a JSON syntax, in line with other components of the information modelling language, the syntax can also be accessed via the ABAC XML schema, which includes the baseline Information model XSD schema on the Endeavour GitHub, and example content can be viewed in the information manager data files folder.