Revision as of 11:08, 22 April 2021

Introduction

This article forms the basis of the technical implementation of any new inbound transform of data into the Discovery Data Service (DDS), specifically for non-transactional flat file formats.

The aim is to ask potential publishers to consider and answer the following questions:

- How will the source data be sent to DDS? Will it be an SFTP push or pull? Will the files be encrypted? See Secure publication to the DDS for currently supported options.

- How often will the source data be sent to DDS? Will it be sent in a daily extract? See Latency of extract data feeds to see how often and when data is currently received, and how that relates to the data available in the DDS.

- How should the source data be mapped to the FHIR-based DDS data format? What file contains patient demographics? What column contains first name? What column contains middle names? See Publishers and mapping to the common model for more information.

- How should any value sets be mapped to equivalent DDS value sets? What values are used for different genders and what FHIR gender does each map to?

- What clinical coding systems are used? Are clinical observations recorded using SNOMED CT, CTV3, Read2, ICD-10, OPCS-4 or some other nationally or locally defined system?

- How are source data records uniquely identified within the files? What is the primary identifier/key in each file and how do files reference each other?

- How are inserts, updates and deletes represented in the source data? Is there a 'deleted' column?

- Is there any special knowledge required to accurately process the data?

- Will the publishing of data be done in a phased approach? Will the demographics feed be turned on first, with more complex clinical structures at a later date?

- What files require bulk dumps for the first extract and which will start from a point in time? Will a full dump of organisational data be possible? Will a full dump of master patient list be possible?

- Is there any overlap with an existing transform supported by DDS? Does the feed include any national standard file that DDS already supports?
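The value set question above can be illustrated with a minimal Java sketch. The source codes (`M`, `F`, `I`, `U`) and the class name are assumptions made for illustration only; each publisher would supply its own values. The target codes are the standard FHIR administrative gender codes (`male`, `female`, `other`, `unknown`). This is not the DDS implementation.

```java
import java.util.Locale;
import java.util.Map;

/** Hypothetical sketch: maps a publisher's gender codes to FHIR administrative gender codes. */
final class GenderValueSetMapper {

    // Assumed source codes; a real publisher would document its own value set.
    private static final Map<String, String> SOURCE_TO_FHIR = Map.of(
            "M", "male",
            "F", "female",
            "I", "other",
            "U", "unknown");

    /** Returns the FHIR code, or "unknown" for a missing or unmapped source value. */
    static String toFhirGender(String sourceCode) {
        if (sourceCode == null) {
            return "unknown";
        }
        String key = sourceCode.trim().toUpperCase(Locale.ROOT);
        return SOURCE_TO_FHIR.getOrDefault(key, "unknown");
    }

    public static void main(String[] args) {
        System.out.println(toFhirGender("f"));
        System.out.println(toFhirGender("X"));
    }
}
```

Falling back to `unknown` for unmapped values is one possible design choice; a transform could equally reject or log such values so that gaps in the agreed value set are noticed.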

Questions that need to be answered are highlighted throughout this article:

| This is a question that needs to be answered. |

The DDS technical team can then use the answers provided to implement the technical transformation for the source data.

Completion and sign off of the specifications is a collaborative process, involving the data publisher (or their representatives) and the DDS technical team; the data publisher has a greater understanding of their own data, and the DDS technical team have experience of multiple and differing transformations.

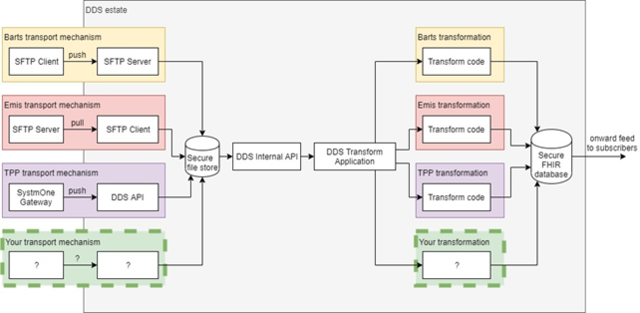

DDS inbound overview

This diagram provides an overview of the way the DDS processes a sample of existing inbound data feeds. Each data publisher has their own transport mechanism for getting data into the DDS estate (SFTP push, SFTP pull, bespoke software). The data is then sent through a common pipeline that performs a number of checks (most importantly, validating that DDS has a data processing agreement to accept the data). Once the checks are passed, the data is handed over to the DDS transformation application, which then invokes the relevant transform code for the published data format. The result of the transformation code is standardised FHIR that is saved to the DDS database; this may be further transformed for any subscribers to that publisher’s data.

Unless there are exceptional circumstances that preclude it, all DDS software is written in Java, running on Ubuntu.

| The image represents the logical flow of data into the DDS, rather than the distinct software components themselves. |

Publishing process

The end-to-end process for publishing new data into the DDS generally follows the steps below. This isn’t necessarily a fixed-order list, as many of the steps can be performed out of order or in parallel with other steps, but it covers all the actions that need to happen.

1. Agree extract transport mechanism and frequency.

2. Define project phases based on data types and dependencies.

3. Set up transport mechanism and test (this can be done at any point before live data is sent).

4. For the first phase:

   - Provide file extract format specification.

   - Provide sample data.

   - Validate and assure the published data.

   - Define how inserts, updates and deletes are represented.

   - Define transformation mapping.

   - List any known special cases.

   - Sign off transform specification document.

   - Implement transform code to specification.

   - Validate and assure new code using sample data (if possible).

   - Start live feed of data, bulk followed by deltas (data will be securely stored but won’t flow into production DDS yet).

   - Validate and assure code using subset of live data (in secure pre-production environment).

   - Deploy new code to production DDS and start processing data.

   - At this point, this phase is now considered live.

5. If required, repeat step 4 for the subsequent phases.

Assurance and sign-off

Assurance and sign-off are required at three distinct stages of the publishing process:

Extract assurance

The data publisher must assure that their data extract accurately represents the data in the source clinical software.

How this assurance is done will vary from publisher to publisher, although the DDS team may be able to provide some guidance on how to conduct this.

Transform specification sign-off

Both the publisher and DDS team must agree that the completed transform specification document is accurate and sufficient to implement the transformation code.

This will be done when both parties agree that the document has been sufficiently completed.

Transform assurance

Once the transformation implementation is complete, it must be assured that it can accurately transform the source data into the DDS format.

This will generally be done in two passes, once on sample data and a second time on live data. This will involve comparing the data in the pre-production DDS against the source software.

Transport mechanism & frequency

The goal of the transport mechanism is to securely send the extract files into the DDS estate (hosted in AWS with HSCN connectivity). The frequency defines how often files are sent.

For context, these are examples of existing publisher scenarios:

- A daily extract of 100+ files, staggered throughout the day. As each file is produced, it is uploaded to a DDS SFTP server over HSCN (secured with an SSH key). As the SFTP server receives new files, these are moved into the secure file store. At midnight, the files received that day are sent for transformation.

- A daily extract of 20 files. The files are PGP compressed and encrypted and made available on an SFTP server the publisher hosts on HSCN (secured with an SSH key). Each hour, a DDS SFTP client polls the SFTP server for new files. When new files are detected, they are downloaded and decrypted, then stored in the DDS secure file store. Once DDS has received the full set of 20 daily files, the new files are sent for transformation.

- A single daily zip file, delivered by the publisher's own client software to a Windows PC workstation hosted within a CCG building. A small DDS-written application runs on the workstation and detects each new extract file, which is then uploaded via HTTPS over HSCN to a DDS API. The DDS API unzips the file and stores the decompressed files in the secure file store; these are then sent for transformation.
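As an illustration of the second scenario, where transformation only starts once the full daily set has arrived, the completeness check could be sketched as follows. The class name, method names and file names are hypothetical, and the sketch assumes the expected file names are known in advance; it does not describe how the DDS SFTP client actually works.

```java
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical sketch: decides whether a daily extract has been fully received. */
final class DailyBatchCheck {

    /** Returns the expected file names not yet received; an empty result means the batch is complete. */
    static Set<String> missingFiles(Set<String> expected, Set<String> received) {
        Set<String> missing = new TreeSet<>(expected);
        missing.removeAll(received);
        return missing;
    }

    /** True when every expected file has been received. */
    static boolean isComplete(Set<String> expected, Set<String> received) {
        return missingFiles(expected, received).isEmpty();
    }

    public static void main(String[] args) {
        // Assumed file names, for illustration only.
        Set<String> expected = Set.of("patients.csv", "encounters.csv", "observations.csv");
        Set<String> received = Set.of("patients.csv", "observations.csv");
        System.out.println("Complete: " + isComplete(expected, received));
        System.out.println("Still waiting for: " + missingFiles(expected, received));
    }
}
```

Each polling cycle would run a check like this after downloading new files, and only hand the batch on for transformation once nothing is missing.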

| How many files will be produced in your extract and how often, and how do you see them being transported to the DDS? |

| It is recommended that any transport be done using SFTP, secured using an SSH key and over HSCN. For further security, it is also recommended that extract files be encrypted themselves before transport. |

Scheduling and dependencies

Depending on the complexity of the published data, it may be necessary to split the implementation into several phases, each phase introducing new data to the DDS. For example, if a secondary care publisher extract contains organisational metadata, patient demographics, procedures, diagnoses, and clinical observations, it may be more efficient to break the implementation into several discrete phases, such as completing the full procedures implementation before moving on to conditions, and then finally to observations.

However, because some data naturally depends on other data, the dependencies must be understood to know what data items must be done before others. For example:

- Organisational metadata is always required first, since everything depends on organisations, locations and clinicians.

- Patient demographics are always required next, since all other clinical data depends on matching to patient records.

- A&E attendances, inpatient stays and outpatient appointments are generally required next.

- Finally, procedures, conditions and observations can be interpreted.
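The dependency rules listed above amount to an ordering problem: each data type can only be implemented after everything it depends on. A minimal Java sketch of deriving such an order with a depth-first topological sort follows. The data type names and the dependency map are assumptions chosen to mirror the example list, not an agreed DDS phase plan.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical sketch: orders data types so that prerequisites always come first. */
final class PhaseOrdering {

    /** Returns the data types in an order where each appears after everything it depends on. */
    static List<String> order(Map<String, List<String>> dependsOn) {
        List<String> ordered = new ArrayList<>();
        Set<String> done = new LinkedHashSet<>();
        Set<String> inProgress = new LinkedHashSet<>();
        for (String dataType : dependsOn.keySet()) {
            visit(dataType, dependsOn, done, inProgress, ordered);
        }
        return ordered;
    }

    private static void visit(String dataType, Map<String, List<String>> dependsOn,
                              Set<String> done, Set<String> inProgress, List<String> ordered) {
        if (done.contains(dataType)) {
            return;
        }
        // Seeing a data type again before it is finished means the dependencies form a loop.
        if (!inProgress.add(dataType)) {
            throw new IllegalStateException("Circular dependency involving " + dataType);
        }
        for (String prerequisite : dependsOn.getOrDefault(dataType, List.of())) {
            visit(prerequisite, dependsOn, done, inProgress, ordered);
        }
        inProgress.remove(dataType);
        done.add(dataType);
        ordered.add(dataType);
    }

    public static void main(String[] args) {
        // Assumed dependencies, mirroring the example list above.
        Map<String, List<String>> dependsOn = new LinkedHashMap<>();
        dependsOn.put("observations", List.of("encounters"));
        dependsOn.put("encounters", List.of("patients"));
        dependsOn.put("patients", List.of("organisations"));
        dependsOn.put("organisations", List.of());
        System.out.println(order(dependsOn));
    }
}
```

Writing the dependencies down in this form also exposes circular references early, which would otherwise only surface during implementation.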

| Is scheduling necessary? What are the dependencies within the data? |

| The scope of the first phase should now be agreed. |

Extract file specification

| Can you supply technical extract documentation such as file specifications? |

Sample data

| Can you provide sample data? |

| Sample data should include fictitious patients only but be as realistic as possible. It should also be in the exact same format as the planned extract. |

Inserts, updates, and deletes

The ideal scenario is that each file within the extract is sent initially as a bulk extract followed by regular deltas (differences only), with clear indication of which records are new, which are updates, and which are deleted.

| Can you explain how the extract represents updates and deletes to data and whether it will be possible to follow the bulk, then deltas pattern? |
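To make the bulk-then-deltas pattern concrete, here is a minimal Java sketch of applying extracts to a keyed store. It assumes each record carries a unique identifier and a 'deleted' flag; the class, field and method names are hypothetical, and a real extract may represent deletes differently.

```java
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: applies a bulk extract followed by deltas to an in-memory store. */
final class DeltaApplier {

    /** One source row: an assumed shape with a primary key, a payload and a 'deleted' flag. */
    static final class SourceRecord {
        final String id;
        final String payload;
        final boolean deleted;

        SourceRecord(String id, String payload, boolean deleted) {
            this.id = id;
            this.payload = payload;
            this.deleted = deleted;
        }
    }

    private final Map<String, String> current = new LinkedHashMap<>();

    /** Applies one extract; the bulk and every later delta are handled the same way. */
    void apply(List<SourceRecord> extract) {
        for (SourceRecord record : extract) {
            if (record.deleted) {
                current.remove(record.id);
            } else {
                // Insert when the key is new, update when it already exists.
                current.put(record.id, record.payload);
            }
        }
    }

    /** Returns a read-only copy of the current state. */
    Map<String, String> snapshot() {
        return Collections.unmodifiableMap(new LinkedHashMap<>(current));
    }

    public static void main(String[] args) {
        DeltaApplier applier = new DeltaApplier();
        // Bulk extract: every record is new.
        applier.apply(List.of(
                new SourceRecord("1", "first version", false),
                new SourceRecord("2", "to be deleted", false)));
        // Delta: one update and one delete.
        applier.apply(List.of(
                new SourceRecord("1", "second version", false),
                new SourceRecord("2", null, true)));
        System.out.println(applier.snapshot());
    }
}
```

Treating inserts and updates identically (an upsert keyed on the record identifier) only works if the identifier is stable across extracts, which is why the question of unique identification matters.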

Transformation mapping

All inbound DDS transformations, for different source formats, output FHIR using a DDS-specific profile. Approximately 20 different FHIR resources are used in DDS to date. Each FHIR resource has many fields of different types, some mandatory, some not.

The sections in Resource mapping list all the fields for each resource type that the DDS uses.

| Each field requires you to describe where that field can be found in the source data and what, if any, mapping or formatting is required. |

Links to the original FHIR definitions on the HL7 website are also provided, to give more detailed explanations on what each resource is for.

| Field names in this article may not be the same as on the HL7 website, and may not be on the HL7 website at all; DDS uses a number of FHIR extensions to add fields to each resource. |
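As a rough illustration of what a completed field mapping captures (source file, source column, and any formatting), the following Java sketch describes one mapping and applies it to a row. The file name `patients.csv`, the column `DOB`, the `dd/MM/yyyy` source date format and the class names are all assumptions for illustration; `Patient.birthDate` is used as an example target field and may be named differently in the DDS profile.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical sketch: describes where a target field comes from and how it is formatted. */
final class FieldMappingSketch {

    static final class FieldMapping {
        final String targetField;
        final String sourceFile;
        final String sourceColumn;
        final Function<String, String> format;

        FieldMapping(String targetField, String sourceFile, String sourceColumn,
                     Function<String, String> format) {
            this.targetField = targetField;
            this.sourceFile = sourceFile;
            this.sourceColumn = sourceColumn;
            this.format = format;
        }

        /** Reads the source column from a row and formats it; returns null when the value is absent. */
        String apply(Map<String, String> row) {
            String raw = row.get(sourceColumn);
            if (raw == null || raw.isBlank()) {
                return null;
            }
            return format.apply(raw.trim());
        }
    }

    // Assumed source date format.
    private static final DateTimeFormatter SOURCE_DATE = DateTimeFormatter.ofPattern("dd/MM/yyyy");

    // Assumed mapping: date of birth is reformatted to an ISO-8601 date.
    static final FieldMapping DATE_OF_BIRTH = new FieldMapping(
            "Patient.birthDate", "patients.csv", "DOB",
            raw -> LocalDate.parse(raw, SOURCE_DATE).toString());

    public static void main(String[] args) {
        Map<String, String> row = Map.of("DOB", "31/01/1980");
        System.out.println(DATE_OF_BIRTH.targetField + " = " + DATE_OF_BIRTH.apply(row));
    }
}
```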