Health Information modelling language - overview

DavidStables


Revision as of 12:49, 27 December 2020

Please note: the information in this section represents a specification of intent and is a work in progress. Actual implementations using the language only partially implement the grammars and syntaxes described here.

Background and rationale

Question: Yet another language? Surely not.

Answer: No, or at least, not quite.

Firstly, it is worth considering what the purpose of a modelling language is.

The main purpose of a modelling language is to exchange data and information about data models in a way that both machines and humans can understand. A purely machine based language would be no more than a series of binary bits, perhaps recognisable as hexadecimal digits. A purely human based language would be ambiguous as all human languages are. A language that is both can be used to promote a shared understanding of often complex structures whilst enabling machines to process data in a consistent way.

The Discovery modelling language serves this purpose in an unusual way. Instead of a new language, it can be considered "a mixed language" representing a convergence of modern semantic web based modelling languages. The language is used as a means of eliminating the conflicting grammars and syntaxes of different open standard modelling languages. It does so by applying the relevant parts of the different languages (or profiles), using a set of conventions, to achieve an integrated whole. The language supports its own grammar, which reflects a number of pragmatic decisions, but the constituent languages can also be used in their native form, as they are directly translatable.

It is worth looking at the background from a historical perspective, looking back over the last 40 years or so.

Prior to the semantic web idea, information modelling was considered in either hierarchical or relational terms. Healthcare informatics adopted the hierarchical approach, which resulted in the partial adoption of standards such as EDIFACT and HL7 or, in some cases, home-grown XML-compliant representations such as those used in the NHS Data Dictionary.

Following the proposal of the Semantic Web, the publication of the Resource Description Framework (RDF, and subsequently RDFS) and OWL brought together the fundamentals of spoken language grammar, such as Subject/Predicate/Object, with the mathematical constructs of description logic and graph theory. Since then a plethora of W3C grammars have evolved, each designed to tackle different aspects of data modelling.

All of these show a degree of convergence in that they are all based on the same fundamentals, which include graph theory and description logic. There is a tendency towards the use of an IRI (Internationalized Resource Identifier) to represent concepts and the use of graphs to model relationships. However, their grammars and syntaxes create tensions between the different perspectives on seemingly similar concepts. These different perspectives are gradually being harmonised but remain incompatible in places.

Rather than propose a new harmonised language, the Discovery modelling language is designed to demonstrate a real world practical application of a convergent approach to modelling, using a set of multi-organisational health records covering a population of millions of citizens. This combined language enables a single integrated approach to modelling data whilst at the same time supporting the interoperable, standards based languages used for the various specialised purposes. The open community based languages, and their various syntaxes, can thus be considered specialised sublanguages of the Discovery language.

The main language component areas

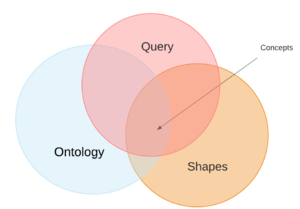

The language is designed to support the 3 main purposes of information modelling, which are: Inference, validation and enquiry.

To support these purposes, the language is used to model 3 main types of constructs: Ontology, Data model (or shapes), and Query.

It is not necessary to understand the standard languages in order to understand the modelling or to use Discovery. For those who have an interest and a technical aptitude, the best places to start are OWL2, SHACL, SPARQL, GraphQL and specialised use case based constructs such as ABAC. For those who want to get to grips with the underlying logic, the best places to start are first order logic and description logic, together with an understanding of at least one programming language (such as C#, Java, JavaScript or Python) and a query language such as SQL.

The only purpose of the language is to help create, maintain and represent information models, so how the languages are used is best seen in the sections on the information model.

Language and the information models

The language (or languages) is a means to an end: a human and machine readable means of exchanging information models, and of interacting with implementations of health records.

An information model is an abstract representation of data, but an information model must have content, and that content must be stored.

Data cannot be stored conceptually, only physically, and thus there must be a relationship between the abstract model and the physical store.

An abstract model is best instantiated as a set of objects, which are instances of classes. In practice those objects are instantiated in a programming language, e.g. Java.

The model can then be used as the source and target of data exchange, with the exchange itself carried out in a modelling language via a set of APIs.

This can be visualised as in the diagram on the right. It can be seen that the inner physical store is accessed by an object model layer, which is itself accessed by APIs using modelling language grammar and syntax. The diagram shows the main grammars supported by the Discovery information model, including the Discovery information modelling language grammar itself.

Support for the main languages means that a Discovery information model instance has 2 levels of separation of concerns from the languages used to exchange data. Because those languages are industry standards (or industry adopted), the models are interoperable. There is no need to buy into the Discovery language in order to use the information model.

The remainder of this article describes the language itself, starting with some high level sections on the components, and eventually providing a specification of the language and links to technical implementations, all of which are open source.

The main language constructs

This section describes some of the high level conceptual constructs used in the language. This does not describe the language grammar itself, as this is described later on.

The Concept

Common to all of the language is the modelling abstraction "concept", which is an idea that can be defined, or at least described. All classes and properties in a model are represented as concepts. In line with semantic web standards a concept is represented in two forms:

- A named concept, the name being an Internationalized Resource Identifier (IRI). A concept is normally also annotated with human readable labels such as clinical terms, scheme dependent codes, and descriptions.

- An unnamed (anonymous) concept, which is defined by an expression, which itself is made up of named concepts or expressions.

The information model itself is a graph. Thus the IRIs can also be said to be nodes or edges, and the anonymous concepts are used as anonymous nodes, something which many of the languages support.
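The two forms of concept can be sketched as a small data structure. This is only an illustrative sketch, not the Discovery class model: the class names, the `operator` field and the example.org IRIs are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class NamedConcept:
    """A concept identified by an IRI, with an optional human readable label."""
    iri: str
    label: str = ""


@dataclass(frozen=True)
class AnonymousConcept:
    """An unnamed concept defined by an expression over other concepts."""
    operator: str                      # e.g. "intersection" (illustrative only)
    operands: Tuple["Concept", ...]    # named concepts or nested expressions


Concept = Union[NamedConcept, AnonymousConcept]


def named_iris(concept: Concept) -> set:
    """Collect the IRIs of every named concept reachable from `concept`."""
    if isinstance(concept, NamedConcept):
        return {concept.iri}
    found = set()
    for operand in concept.operands:
        found |= named_iris(operand)
    return found


# Hypothetical IRIs, used only for illustration.
asthma = NamedConcept("http://example.org/im#Asthma", "Asthma")
severe = NamedConcept("http://example.org/im#Severe", "Severe")
severe_asthma = AnonymousConcept("intersection", (asthma, severe))
```

Walking the expression with `named_iris(severe_asthma)` returns the IRIs of the two named concepts it is built from, which is the sense in which an anonymous node in the graph is always grounded in named nodes.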

Concepts are specialised into classes or properties and there is a wide variety of types and purposes of properties.

The language vocabulary also includes specialised types of properties, effectively used as reserved words. For example, the ontology uses a type of property known as an Axiom, which states the definition of a concept, for example the axiom "is a subclass of" to state that membership of class A entails membership of class B. A data model may use a specialised property "target class" to state the class which the shape is describing and constraining for a particular business purpose. The content of these vocabularies is dictated by the grammar specification, but the properties and their purpose are derived directly from the sublanguages.

Context

Data is considered in a linked form, which means that IRIs are the main identifiers. However, IRIs can be unwieldy to use, and some of the languages, such as GraphQL, do not use them. Furthermore, when used in JSON (the main exchange syntax via APIs) they can cause significant bloat. Also, identifiers themselves have often been created for local use in single local systems.

To create linked data from local identifiers or vocabulary, the concept of Context is applied. The main forms of context in use are:

- PREFIX declaration for IRIs, which enables the use of abbreviated IRIs. This approach is used in OWL, RDF Turtle, SHACL and Discovery itself.

- VOCABULARY CONTEXT declaration for both IRIs and other tokens. This approach is used in JSON-LD, which converts local JSON properties and objects into linked data identifiers via the @context keyword.

- MAPPING CONTEXT definitions for system level vocabularies. This provides sufficient context to uniquely identify a local code or term by including details such as the health care provider, the system and the table within a system.
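The three forms of context above can be sketched as simple lookups. This is a deliberately simplified illustration: real JSON-LD expansion is considerably more involved, and the prefix, the example.org IRIs, the provider, system and table names, and the function names are all hypothetical.

```python
# Hypothetical PREFIX declarations.
PREFIXES = {"ex": "http://example.org/im#"}

# Hypothetical vocabulary context, in the spirit of a JSON-LD @context.
VOCABULARY_CONTEXT = {
    "dob": "http://example.org/im#dateOfBirth",
    "gender": "http://example.org/im#currentGender",
}

# Hypothetical mapping context: (provider, system, table, local code) -> IRI.
MAPPING_CONTEXT = {
    ("ProviderA", "SystemX", "observations", "BP01"):
        "http://example.org/im#SystolicBloodPressure",
}


def expand_prefixed(name, prefixes):
    """Expand an abbreviated IRI such as 'ex:Asthma' using PREFIX declarations."""
    prefix, separator, local = name.partition(":")
    if separator and prefix in prefixes:
        return prefixes[prefix] + local
    return name  # already a full IRI, or not abbreviated


def expand_document(document, context):
    """Replace local JSON property names with the IRIs given by the context."""
    return {context.get(key, key): value for key, value in document.items()}


def resolve_local_code(provider, system, table, code, mapping_context):
    """Identify a local code using provider, system and table as context."""
    return mapping_context.get((provider, system, table, code))
```

For instance, `expand_prefixed("ex:Asthma", PREFIXES)` yields the full IRI, while the same local code `BP01` coming from a different provider or table would not resolve unless a mapping for that context had been declared.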

Grammars and syntaxes

The Discovery language, as a mixed language, has its own grammars as below, but in addition the language sub components can be used in their respective grammars and syntaxes. This enables multiple levels of interoperability, including between specialised community based languages and more general languages.

For example, the Snomed-CT community has a specialised language "Expression constraint language" (ECL), which can also be directly mapped to OWL2 and Discovery, and thus Discovery language maps to the 4-6 main OWL syntaxes as well as ECL. Each language has its own nuances, usually designed to simplify representations of complex structures. For example, in ECL, the reserved word MINUS (used to exclude certain subclasses from a superclass) maps to the much more obscure OWL2 syntax that requires the modelling of class IRIs "punned" as individual IRIs in order to properly exclude instances when generating lists of concepts.
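The intent of MINUS can be illustrated as a set difference over a subclass hierarchy. The sketch below is not an ECL or OWL2 implementation; the tiny hierarchy and the function names are assumptions chosen only to show the effect of excluding one branch from a superclass.

```python
# Hypothetical hierarchy: child concept -> set of direct parent concepts.
SUBCLASS_OF = {
    "ViralPneumonia": {"Pneumonia"},
    "BacterialPneumonia": {"Pneumonia"},
    "PneumococcalPneumonia": {"BacterialPneumonia"},
}


def descendants_or_self(concept, subclass_of):
    """Return the concept plus everything that is directly or indirectly a subclass of it."""
    result = {concept}
    changed = True
    while changed:
        changed = False
        for child, parents in subclass_of.items():
            if child not in result and parents & result:
                result.add(child)
                changed = True
    return result


def minus(include, exclude, subclass_of):
    """Concepts under `include`, excluding `exclude` and its subclasses."""
    return (descendants_or_self(include, subclass_of)
            - descendants_or_self(exclude, subclass_of))
```

With this hierarchy, `minus("Pneumonia", "BacterialPneumonia", SUBCLASS_OF)` leaves only `Pneumonia` and `ViralPneumonia`, which is the kind of concept list the text describes generating.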

Discovery language has its own grammars, which include:

- A human natural language approach to describing content, presented as optional terminal literals to the terse language

- A terse abbreviated language, similar to Turtle

- A proprietary JSON based grammar, which maps directly to the internal class structures used in Discovery

- An open standard JSON-LD representation

Because the information models are accessible via APIs, this means that systems can use any of the above, or exchange information in the specialised standard sublanguages which are:

- Expression constraint language (ECL) with its single string syntax

- OWL2 DL presented as functional syntax, RDF/XML, Manchester, JSON-LD

- SHACL presented as JSON-LD

- GraphQL presented as JSON-LD (GraphQL-LD) or GraphQL natively

- XACML presented as JSON

Semantic Ontology

Main article: Discovery semantic ontology language

The semantic ontology subsumes OWL2 DL.

OWL2, like Snomed-CT, forms the logical basis for the static data representations, including semantic definition, data modelling and modelling of value sets. OWL2 subsets of Discovery are available in the Discovery syntaxes or the OWL2 syntaxes.

In its usual use, OWL2 EL is used for reasoning and classification under the open world assumption. In effect this means that OWL2 can be used to infer X from Y, which forms the basis of most subsumption or entailment queries in healthcare.

OWL2 DL can also be used to model property domains and ranges so that they may be used as editorial policies. Where classic OWL2 DL normally models the domains of a property in order to infer the class of a certain entity, one can use the same grammar for editorial policies, i.e. only certain properties are allowed for certain classes.

For example, where OWL2 may say that one of the domains of a causative agent is an allergy (i.e. an unknown class with a property of causative agent is likely to be an allergy), in the modelling the editorial policy states that an allergy can only have properties that are allowed via the property domain. Thus the Snomed MRCM can be modelled in OWL2 DL.
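The two readings of a property domain can be contrasted in a short sketch. This is a simplified illustration rather than OWL2 reasoning: the class hierarchy, the property names and the function names are hypothetical.

```python
# Hypothetical single-parent hierarchy and property domains.
SUBCLASS_OF = {"DrugAllergy": "Allergy", "Allergy": "ClinicalFinding"}
PROPERTY_DOMAINS = {
    "causativeAgent": {"Allergy"},
    "findingSite": {"ClinicalFinding"},
}


def ancestors_or_self(cls):
    """Return the class followed by its chain of superclasses."""
    chain = []
    while cls is not None:
        chain.append(cls)
        cls = SUBCLASS_OF.get(cls)
    return chain


def infer_classes(properties):
    """Inference reading: a property's domain suggests what the entity is."""
    inferred = set()
    for name in properties:
        inferred |= PROPERTY_DOMAINS.get(name, set())
    return inferred


def disallowed_properties(cls, properties):
    """Editorial policy reading: list properties whose domain does not cover `cls`."""
    lineage = set(ancestors_or_self(cls))
    return [name for name in properties
            if not PROPERTY_DOMAINS.get(name, set()) & lineage]
```

Here `infer_classes(["causativeAgent"])` suggests an allergy, whereas `disallowed_properties("ClinicalFinding", ["causativeAgent"])` reports that the property is not permitted on the broader class, which is the editorial check the text describes.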

The grammar for the semantic ontology language used for reasoning is OWL2 EL, which is a limited profile of OWL2 DL. Thus only existential quantification and object intersections are used for reasoning. However, the language is also used for some aspects of data modelling and value set modelling, which require OWL2 DL because the more expressive constructs such as union (ORs) are needed.

As such the ontology supports the OWL2 syntaxes such as the Functional syntax and Manchester syntax, but can be represented by JSON-LD or the Discovery JSON based syntax, as part of the full information modelling language.

Together with the query language, OWL2 DL also makes the language compatible with Expression constraint language, which is used as the standard for specifying Snomed-CT expression queries.

Ontology purists will notice that modelling a "content model" in OWL2 is in fact a breach of the fundamental open world assumption taken in ontologies, and applies the closed world assumption instead. Consequently, the sublanguage used for data modelling uses OWL for inferencing (open world) but SHACL for describing the models (closed world).

The ontologies that are modelled are considered as modular ontologies. It is not expected that one "mega ontology" would be authored, but that there would be maximum sharing of concept definitions (known as axioms), which results in a super ontology of modular ontologies.

Data modelling and shapes

Data models model classes and properties according to business purposes. This is a different approach to the open world assumption of semantic ontologies.

To illustrate the difference, take the modelling of a human being or person.

From a semantic perspective a person could be said to be equivalent to an animal with a certain set of DNA (nuclear or mitochondrial), perhaps including the means of growth, or perhaps being defined at some point before, at the start of, or sometime after the embryonic phase. One would normally just state that a person is an instance of Homo sapiens and that Homo sapiens is a species of.... etc.

From a data model perspective we may wish to model a record of a person. We could say that a record of a person models a person, and will have one date of birth, one current gender, and perhaps a main residence.

The difference is between the open and closed world: the model of the person is a constraint on the possible (unlimited) properties of a person.
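The record of a person described above can be sketched as a shape with cardinality constraints, checked in a closed world manner. This is an illustrative sketch only, not SHACL itself: the property names, the dictionary layout and the decision to reject properties outside the shape are assumptions.

```python
# Hypothetical shape: property name -> (minimum count, maximum count).
PERSON_RECORD_SHAPE = {
    "target_class": "Person",
    "properties": {
        "dateOfBirth": (1, 1),      # exactly one date of birth
        "currentGender": (1, 1),    # exactly one current gender
        "mainResidence": (0, 1),    # perhaps a main residence
    },
}


def validate(record, shape):
    """Return a list of violations of the shape; an empty list means the record conforms."""
    errors = []
    for name, (min_count, max_count) in shape["properties"].items():
        count = len(record.get(name, []))
        if count < min_count or count > max_count:
            errors.append(f"{name}: expected {min_count}..{max_count}, found {count}")
    # Closed world: anything the shape does not mention is rejected.
    for name in record:
        if name not in shape["properties"]:
            errors.append(f"{name}: not part of this shape")
    return errors
```

A record with one date of birth and one gender conforms, while a record with two dates of birth or an unmodelled property does not, even though an ontology would happily allow a person to have any number of other properties.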

A particular data model is a particular business oriented perspective on a set of concepts. As there are potentially thousands of different perspectives (e.g. a GP versus a geneticist), there is a potentially unlimited number of data models. All the data models modelled in Discovery share the same atomic concepts and the same semantic ontological definitions across ontologies where possible; where this is not possible, mapping relationships are used.

The binding of a data model to its property values is based on a business specific model. For example a standard FHIR resource will map directly to the equivalent data model class, property and value set, whose meaning is defined in the semantic ontology, but the same data may be carried in a non FHIR resource without loss of interoperability.

A common approach to modelling and use of a standard approach to ontology, together with modularisation, means that any sending or receiving machine which uses concepts from the semantic ontology can adopt full semantic interoperability. If both machines use the same data model for the same business, the data may be presented in the same relationship. If the two machines use different data models for different businesses, they may present the data in different ways, but without any loss of meaning or query capability.

The integration between data model shapes and ontological concepts makes the information model very powerful and is the single most important contributor to semantic interoperability.

Data mapping

This part of the language is used to define mappings between the data model and an actual schema, to enable queries and filters to automatically cope with the ever extending ontology and data properties.

This is part of the semantic ontology but uses the idea of context (described later on).

Query

It is fair to say that data modelling and semantic ontology are useless without a means of query.

The current approach to the specification of query uses the GraphQL approach with type extensions and directive extensions.

GraphQL (despite its name) is not in itself a query language but a way of representing the graph-like structure of an underlying model that has been built using OWL. GraphQL has a very simple class and property representation, is ideal for REST APIs, and returns results as JSON objects, in line with the approach taken by the Discovery syntax above.

Nevertheless, GraphQL considers properties to be functions (higher order logic) and therefore properties can accept parameters. For example, a patient's average systolic blood pressure reading could be considered a property with a single parameter being a list of the last 3 blood pressure readings. Parameters are types, and types can be created and extended.
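The blood pressure example can be sketched as a property implemented as a function of its parameter. This is an illustration of the idea only, not GraphQL or Discovery code: the record layout, field names and function names are hypothetical.

```python
from statistics import mean


def last_readings(patient, count=3):
    """Return the `count` most recent readings (ISO formatted dates sort correctly as text)."""
    ordered = sorted(patient["bloodPressureReadings"],
                     key=lambda reading: reading["date"], reverse=True)
    return ordered[:count]


def average_systolic(readings):
    """The 'property': a function whose single parameter is a list of readings."""
    if not readings:
        return None
    return mean(reading["systolic"] for reading in readings)


# Hypothetical patient record, for illustration only.
patient = {
    "bloodPressureReadings": [
        {"date": "2020-01-10", "systolic": 120},
        {"date": "2020-02-10", "systolic": 130},
        {"date": "2020-03-10", "systolic": 140},
        {"date": "2020-04-10", "systolic": 150},
    ]
}
```

Calling `average_systolic(last_readings(patient))` evaluates the property over the three most recent readings, so the oldest reading does not contribute to the result.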

In addition, GraphQL supports the idea of directive extensions, which further extend the grammar.

Thus GraphQL capability is extended by modelling property parameters as types, which supports such things as filtering, sorting and limiting in the same way as any other query language. Subqueries are supported in the same way.
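Filtering, sorting and limiting via a typed parameter might look something like the sketch below. The `ReadingArgs` type, its fields and the record layout are all hypothetical, and the code illustrates the modelling idea rather than any actual GraphQL schema or resolver API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReadingArgs:
    """A parameter modelled as a type: it carries filter, sort and limit options."""
    min_systolic: Optional[int] = None   # filter
    sort_by: str = "date"                # sort key
    descending: bool = True              # sort direction
    limit: Optional[int] = None          # maximum number of results


def blood_pressure_readings(patient, args):
    """A property that accepts a typed parameter and applies it to the readings."""
    rows = patient["bloodPressureReadings"]
    if args.min_systolic is not None:
        rows = [row for row in rows if row["systolic"] >= args.min_systolic]
    rows = sorted(rows, key=lambda row: row[args.sort_by], reverse=args.descending)
    return rows if args.limit is None else rows[:args.limit]


# Hypothetical patient record, for illustration only.
patient = {
    "bloodPressureReadings": [
        {"date": "2020-01-10", "systolic": 120},
        {"date": "2020-02-10", "systolic": 130},
        {"date": "2020-03-10", "systolic": 140},
        {"date": "2020-04-10", "systolic": 150},
    ]
}
```

Because the options live in a type, new capabilities can be added by extending `ReadingArgs` rather than changing the grammar, which is the design point the paragraph above makes.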

GraphQL itself is used when the enquirer is familiar with the local logical schema, i.e. understands the available types and fields. To support semantic web concepts, an extension to GraphQL, GraphQL-LD, is used, which is essentially GraphQL with a JSON-LD context.

GraphQL-LD has been chosen over SPARQL for reasons of simplicity, and because many now consider GraphQL to be a de facto standard. However, this is an ongoing consideration.